In Bayesian statistics [based on those opening words I know I’ve already lost 50% of you] there is a concept of a priori and a posteriori beliefs. A prior probability distribution (also simply called a prior) indicates that before making an observation I already have some beliefs about the nature of the system being observed. For example, when you pick up a coin with the intent to flip it, you expect heads and tails to be more or less equally probable. Your prior is uniform over the two outcomes: you consider the probability of heads to be 50% and the probability of tails to be 50%.

Other sorts of priors are possible. You might give your favorite sports team higher odds of winning the national championship than another team, simply because they’re your favorite (or, alternately, because they’re awesome!). You could suppose that a Democratic candidate is much more likely than a Republican candidate to win the presidency because of the unpopularity of the incumbent party. Priors are essentially preexisting beliefs, and they get us pretty far in many situations. But usually we want to leave the blissful world of prior beliefs and make some observations.

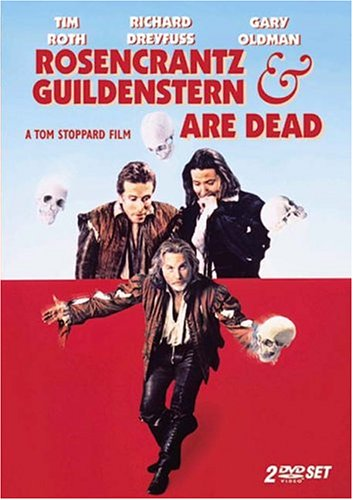

Depending on the strength of your prior, even after seeing a coin land heads up five thousand times in a row (something like that happens in Rosencrantz and Guildenstern Are Dead, which is one of the few things I actually got myself to learn in my statistics class), you might still believe that the coin is fair; or you might already have adjusted your beliefs and concluded that the coin is extremely biased in favor of heads. Either way, whether your new beliefs are identical to your prior beliefs or radically different, you are now dealing with a posterior distribution, or posterior for short. A posterior is what you believe after examining your prior assumptions in the light of new evidence.
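To make "the strength of your prior" a little more concrete, here is a minimal sketch. It assumes (the post itself doesn't pick a distribution) that your belief about the coin's probability of heads is a Beta(alpha, beta) distribution, a standard choice because after observing some heads and tails the posterior is simply Beta(alpha + heads, beta + tails). The larger alpha + beta is, the more evidence it takes to move you. The function name `posterior_mean` and the specific prior values are illustrative, not from the original.

```python
def posterior_mean(alpha, beta, heads, tails):
    """Posterior mean of P(heads) under an assumed Beta(alpha, beta) prior.

    The Beta prior is conjugate to coin-flip (binomial) data, so the
    posterior is Beta(alpha + heads, beta + tails) and its mean is closed-form.
    """
    a_post = alpha + heads
    b_post = beta + tails
    return a_post / (a_post + b_post)


heads, tails = 5000, 0  # five thousand heads in a row

# Weak prior: Beta(1, 1), every bias between 0 and 1 equally plausible.
weak = posterior_mean(1, 1, heads, tails)

# Strong prior: Beta(1e6, 1e6), a near-certain belief that the coin is fair.
strong = posterior_mean(1_000_000, 1_000_000, heads, tails)

print(f"weak prior   -> P(heads) ~ {weak:.4f}")    # weak prior   -> P(heads) ~ 0.9998
print(f"strong prior -> P(heads) ~ {strong:.4f}")  # strong prior -> P(heads) ~ 0.5012
```

Same data, two very different posteriors: the weakly held prior is swept aside by the streak of heads, while the stubbornly held one barely moves.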

Whether we like it or not, we humans tend to operate in this Bayesian manner. Try as we might, we bring prior beliefs to the table in any situation. Whether or not you were a tabula rasa at birth, you certainly aren’t now, and you are affected by previous experiences. We update our beliefs in the light of new evidence. A stubbornly held belief might never really change, which indicates a prior of nigh-unto infinite strength relative to the new evidence. But in many situations we eventually acknowledge the weight of the evidence and change our beliefs. Or, you might say, our beliefs change, and they change us in turn.

So this guarantees a never-ending march towards truth, as long as people allow evidence to influence them, right? Well, maybe. That assumes that your observations of the system are really representative of the nature of the system, and that they represent the entire system and not just one part of it. It assumes that the system even is something akin to what we think it is, or that we model the system in a way that is at least remotely connected to its actual makeup. And it assumes that we didn’t just by chance get a very strange sample that pushes our beliefs in the wrong direction.

In other words, there are lots of pitfalls on the road to a would-be Bayesian perfection of knowledge. And to think that we make so many choices based on such an imperfect system of prior and posterior belief, perception, and evaluation!
